The idea of working in complete darkness was exciting, but I had a hard time coming up with a system that would translate successfully to that situation. When I began working with the Kinect for my conference project, I realized I could also use it for Blackspace. The thought had never occurred to me before, but once I discovered that the Kinect works with an infrared camera and calculates depth, it became the perfect project.

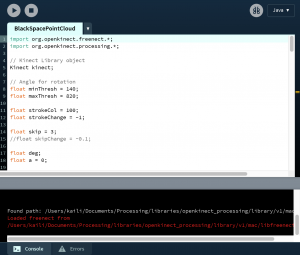

I had only just begun figuring out the Kinect, and at the time the possibilities seemed endless. However, I was very limited at the start for two reasons: first, I was working with the Kinect version 1, which has fewer capabilities than version 2, and second, I had no idea how the “language” of the Kinect libraries worked. Daniel Shiffman’s Open Kinect for Processing library helped a great deal and provided complete examples for various Kinect projects, such as Point Tracking and Depth Testing. It was all very overwhelming, but with time I began to understand how each of the examples functioned.

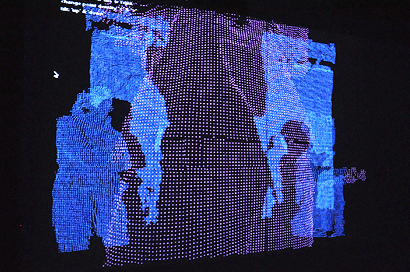

The one example that stood out to me was the Point Cloud example. A point cloud is basically a large set of points that represents the depth of a person or object in 3D space. Shiffman’s Point Cloud was white on a black background and rotated, giving various perspectives of whatever the camera was seeing. It seemed like the most interesting and interactive example, so I decided to alter his Point Cloud for my Blackspace presentation. Working with the Kinect required an understanding of the machine itself as well as the logic of depth and distance. The new vocabulary and functions were a great challenge for me. I had to study and interpret someone else’s code rather than code I had written myself. Perhaps one day I’ll be able to write my own code for the Kinect from scratch, but not for Blackspace.
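To make the idea of a point cloud a little more concrete, here is a minimal sketch in plain Java of how one depth pixel can be turned into a 3D point. It is not Shiffman’s code and not my Blackspace sketch; it uses a generic pinhole-camera model, and the class name `PointCloudSketch`, the method `toPoint`, and the focal length and image-center values are all assumptions chosen for illustration (a real Kinect needs calibrated values).

```java
// A rough illustration only: back-projects a depth pixel into 3D space
// using a generic pinhole-camera model. All constants are assumed values,
// not calibrated Kinect parameters.
final class PointCloudSketch {
    // Hypothetical focal lengths (in pixels) for a 640x480 depth image.
    static final double FX = 580.0;
    static final double FY = 580.0;
    // Hypothetical optical center: the middle of a 640x480 image.
    static final double CX = 320.0;
    static final double CY = 240.0;

    // Converts pixel (px, py) with a depth in meters into {x, y, z} in meters.
    static double[] toPoint(int px, int py, double depthMeters) {
        double x = (px - CX) * depthMeters / FX;
        double y = (py - CY) * depthMeters / FY;
        return new double[] {x, y, depthMeters};
    }

    public static void main(String[] args) {
        // A pixel at the image center lies straight ahead of the camera.
        double[] center = toPoint(320, 240, 2.0);
        System.out.println(center[0] + ", " + center[1] + ", " + center[2]);

        // A pixel to the right of center lands to the right in 3D space.
        double[] right = toPoint(610, 240, 2.0);
        System.out.println(right[0] + ", " + right[1] + ", " + right[2]);
    }
}
```

Doing this for every pixel that has a depth reading gives the full cloud; rotating the view, as in the original example, is then just a matter of drawing the same points from a different angle.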

The end result is a non-rotating point cloud on a black background. The points are pink when a person is closer to the Kinect and blue when they are farther away. It is a simple idea, but one that I thought would be fun and interactive for the whole class. I decided to use two colors to represent depth because it felt more gratifying that way. People want to see results and changes, so the shift from pink to blue is a fun one to watch. I also added various keyPressed() options that change the stroke of each point in the sketch, the point density (how concentrated or spread out the points are), and the tilt of the camera. I felt the project was well received and was fun for everyone. It was fun to see how everyone’s individual movements helped create and alter the sketch.
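The two-color rule and the point density option can be sketched roughly as follows. This is plain Java rather than my actual Processing sketch, and the names `DepthColorSketch`, `colorFor`, `countColors`, `skip`, and `threshold`, along with the specific color codes and depth numbers, are assumptions made up for the example. It also assumes that a smaller depth value means a closer object and that a value of 0 means no reading.

```java
import java.util.Arrays;

// A rough illustration only: picks a color per depth sample and shows how
// a "skip" step changes how many points would be drawn.
final class DepthColorSketch {
    static final int PINK = 0xFF69B4; // assumed color for close points
    static final int BLUE = 0x0000FF; // assumed color for far points

    // Close samples (below the threshold) are pink, everything else is blue.
    static int colorFor(int depth, int threshold) {
        return depth < threshold ? PINK : BLUE;
    }

    // Walks the depth image, sampling every `skip` pixels in x and y,
    // and returns {pinkCount, blueCount} for the points that would be drawn.
    static int[] countColors(int[] depth, int width, int height, int skip, int threshold) {
        int pink = 0;
        int blue = 0;
        for (int y = 0; y < height; y += skip) {
            for (int x = 0; x < width; x += skip) {
                int d = depth[x + y * width];
                if (d <= 0) {
                    continue; // assumed convention: 0 means no depth reading
                }
                if (colorFor(d, threshold) == PINK) {
                    pink++;
                } else {
                    blue++;
                }
            }
        }
        return new int[] {pink, blue};
    }

    public static void main(String[] args) {
        // A tiny made-up 4x2 depth image: near values on the left, far on the right.
        int[] depth = {500, 500, 900, 900, 500, 0, 900, 900};
        System.out.println(Arrays.toString(countColors(depth, 4, 2, 1, 700))); // [3, 4]
        System.out.println(Arrays.toString(countColors(depth, 4, 2, 2, 700))); // [1, 1]
    }
}
```

A larger `skip` draws fewer, more spread-out points, which is the kind of change a key press can toggle; the threshold decides where pink gives way to blue.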

I believe my project is a system because it follows the “simple rules lead to complex phenomena” aspect of a system. The rules are simple: draw points wherever there is an object; if that object is close, make the points pink, and if it is farther away, make them blue. However, the system as a whole is complex in that many things have to be taken into account, such as object or person position, camera position, location, and movement speed. I don’t think it is self-evolving, because it does not evolve over time on its own. We cause the changes, and they are reflected back to us instantly. I suppose that to make it self-evolving, there would have to be change within the code itself over time that cannot be controlled, only followed.