Tag: update

Interactive City: Emotion Catcher

My original idea was to physically create an emotion catcher. I wanted to have three jars, each one hooked up to a touch sensor that would light up the jar when the metal lid of the jar was touched. Each…

Interactive City: Photomosaic

After the in-class critique, we decided to make a few changes to our project. Originally, we had planned on creating two photographic mosaics that formed one large photo of a student and one large photo of the SLC campus. We…

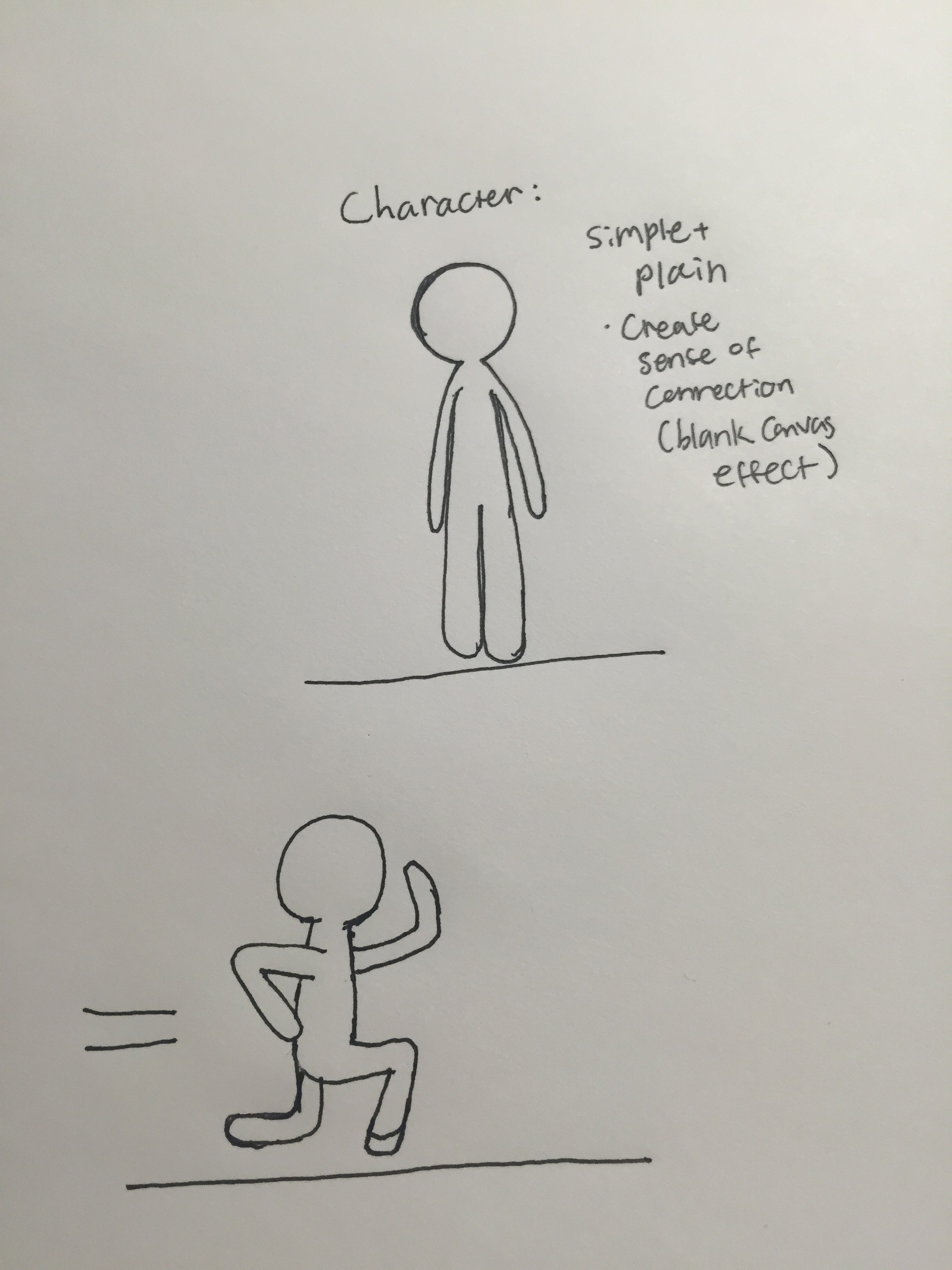

Interactive City: Run Away With Me

I have been working on the character’s movement in the game. I am in the process of using motion detection code to make the character move forward, and forward only. This has turned out to be the biggest hurdle I have to jump over…

Interactive City: Rise and Fall

I have been working on my conference project, and I created an array to store all the information about the leaves. An array allows a lot of data to be stored and retrieved in…

Interactive City: Moving Clarity

Playing with the OpenCV for Processing reference and examples, I have created a program that successfully identifies the area of interest where motion is detected within a webcam feed, and draws to it. This was done using the startBackgroundSubtraction() and findContours() methods in…

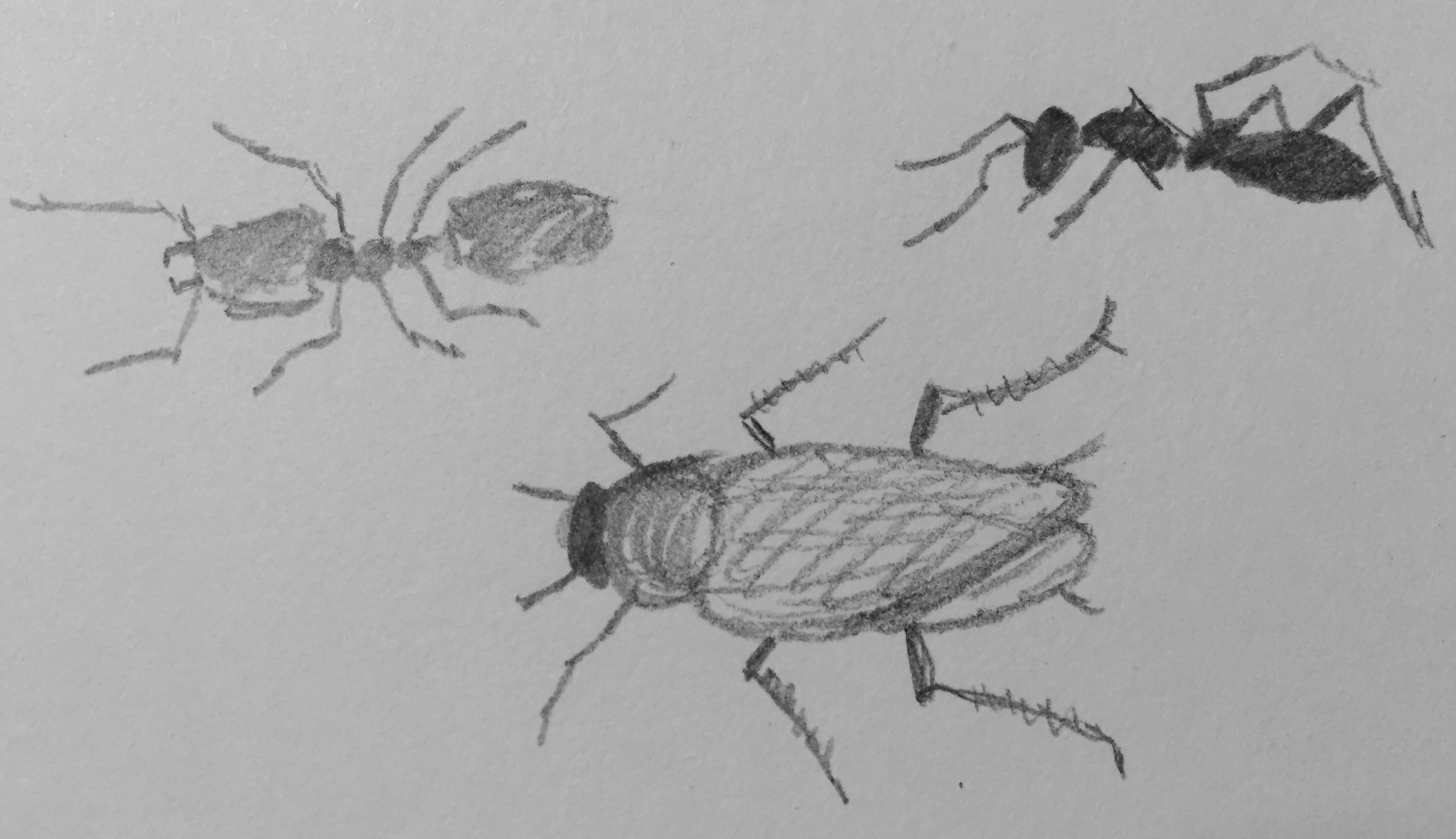

Interactive City: Please Disturb

Previously in my conference post I stated that the animals I am trying to produce would be a collection of animated actions. However, upon reconsidering the process of actually animating the animal sketches I would be making, it seems more…

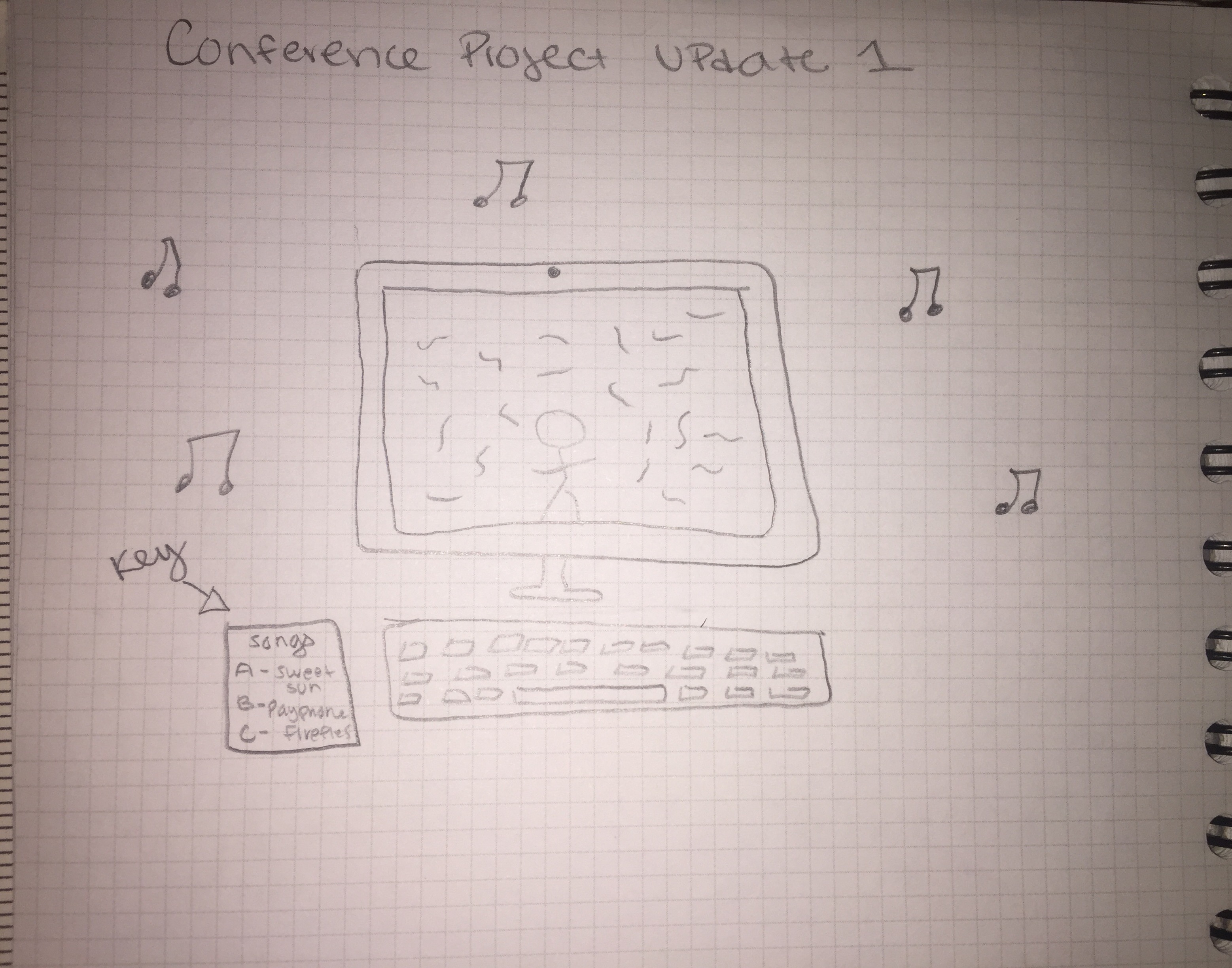

Interactive City: Music in Me

A few changes have been made to my conference project “Music in Me”. For example, I changed the song choices that the user can play. Instead of playing Sweet Sun, Gooey, and Breezeblocks, which are all very similar, I am…

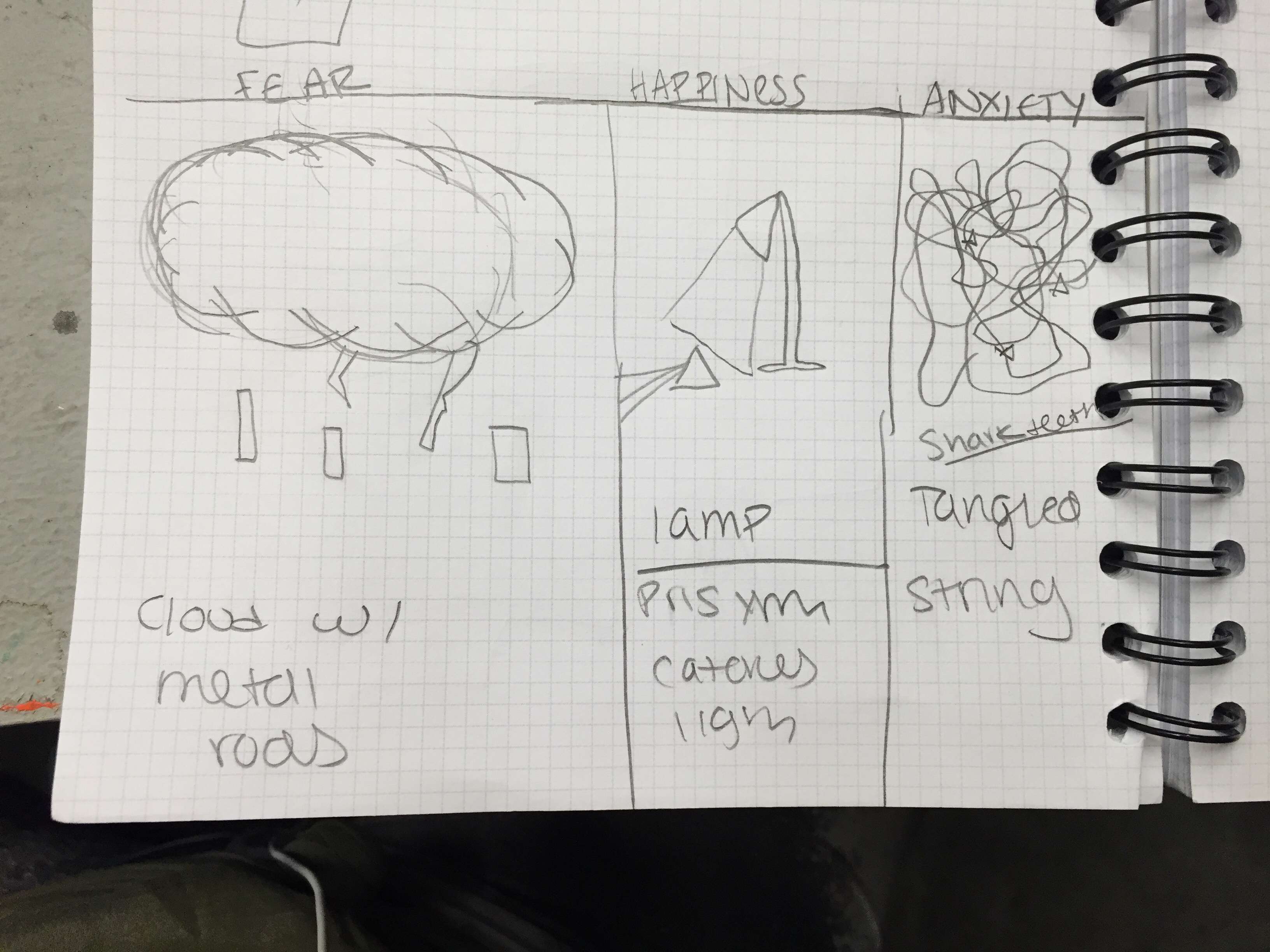

Interactive City: Find Your Mood

I started working on the home screen of my digital mood ring. I added a sparkle background to give it a magical feel, along with lava-lamp-like blobs moving up and down the screen. I made the blobs in Photoshop and…