Tag: systems

System 3: Infinitesimal

For my final system I built off of our cellular automata code, replacing the squares with text. I also added a transparent black background so that new iterations would layer over old ones rather than completely replacing them. Pictured above is the…

Systems Aesthetics: Kinect + Processing

For my conference, I always knew I wanted to do something interactive. Interactivity is probably the most fun I can have with programming; I love the gratification of being so involved in a system. I came into this class with…

Systems Aesthetics: A Later System: Varied Connections

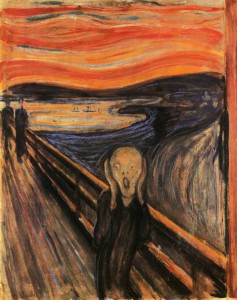

My system 3 consists of various shapes created at random, with the center of each shape connected to one “main” shape via a line. As the shapes move around and collide with each other, they are then sent in other…

Systems Aesthetics: A Later System, princess_me.png

princess_me.png is my attempt to make an infinite glitch system with a picture of a princess. Infinite in this case means that the system doesn’t end. When trying to program the system, I kept getting this screen. I realize after…

Systems Aesthetics: A Later System Video Glitch

My system glitches live-stream video from the computer’s webcam. The project was a direct response to glitch code developed in class that altered the pixels of a given image. Since then I was determined to create a similar…

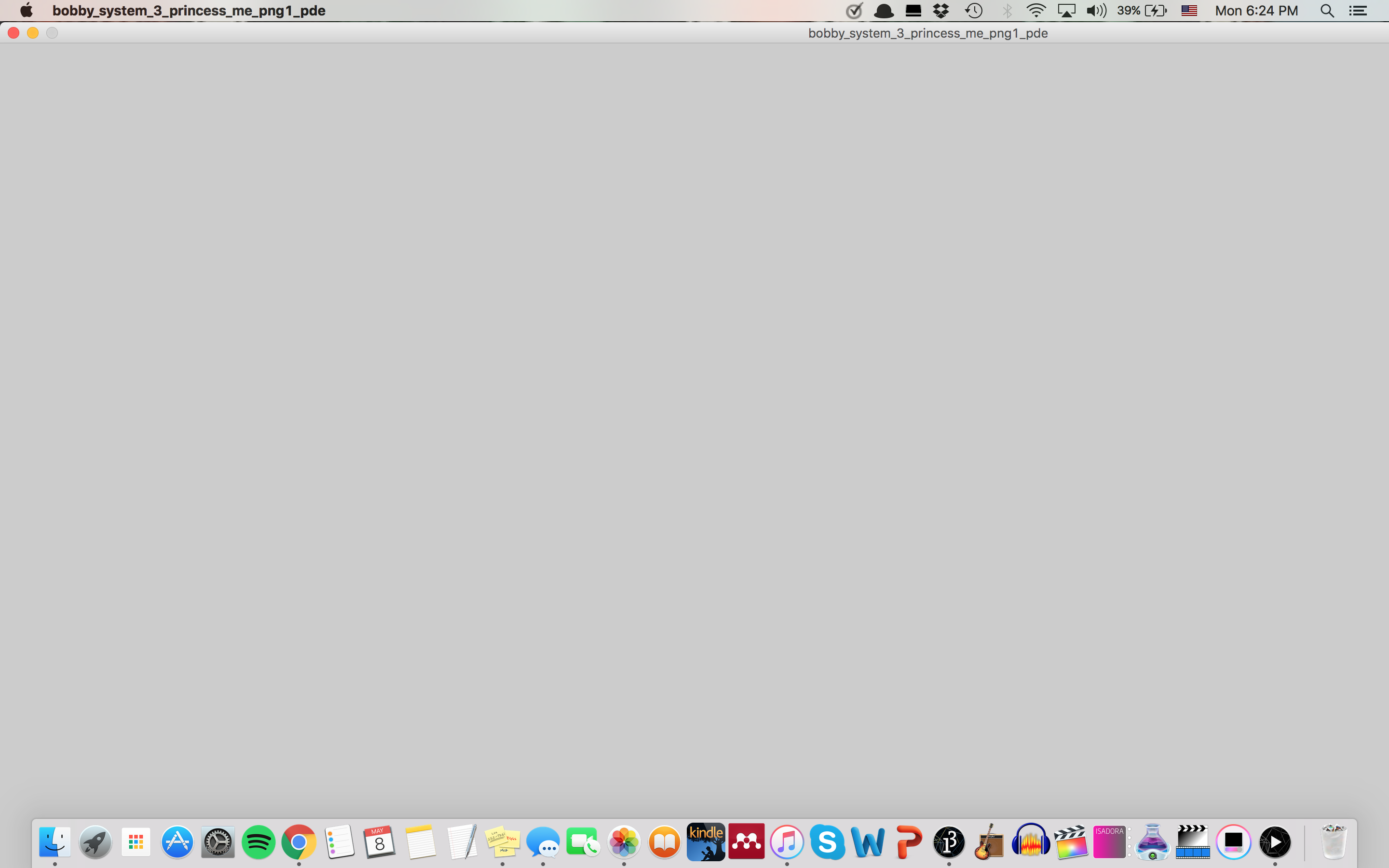

Systems Aesthetics: System 3

Name these 3 paintings. Watch my system. Now name them again. For system 3, I decided to glitch three famous artworks. These artworks can be recognised worldwide by anyone, no matter one’s prior art history knowledge. Whether one knows…

Systems Aesthetics: An Early System

As our readings have progressed, so has the class’s understanding of systems and of what attributes they need in order to be classified as a ‘System’. My first system had the characteristics of an extremely simple system; however, it was missing extra…