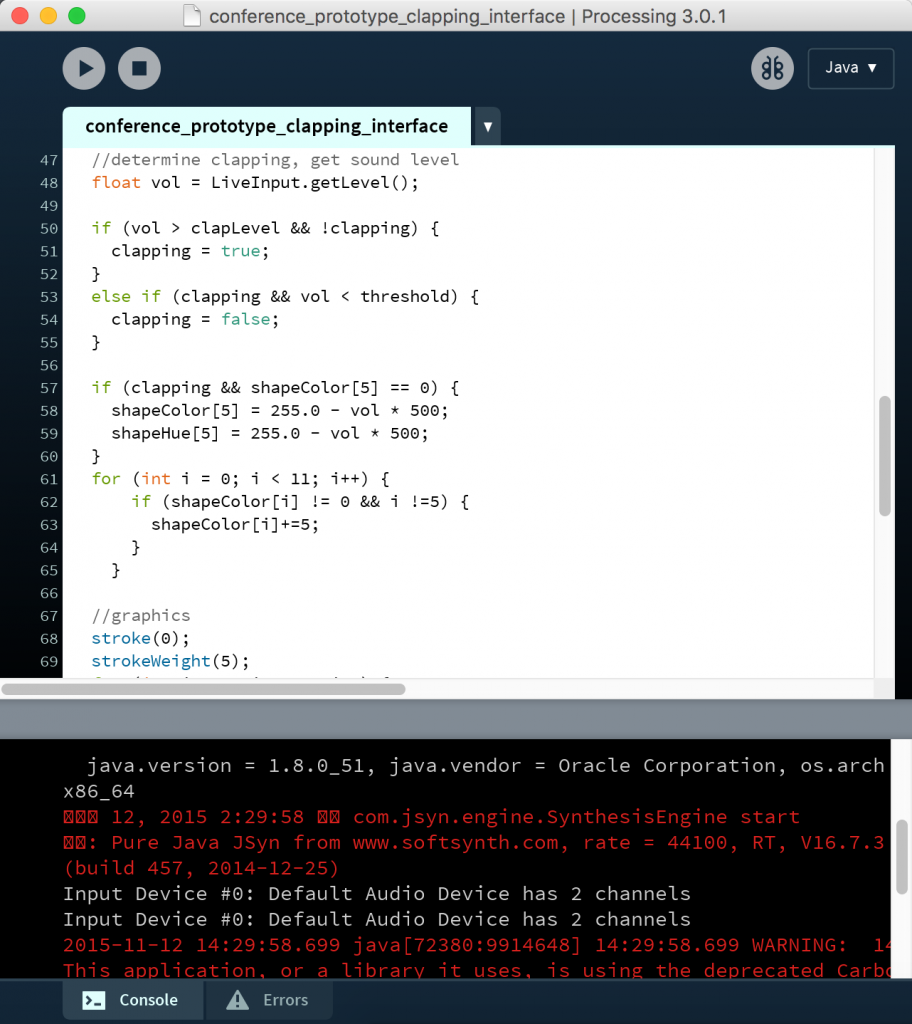

So far, I have learned how to use the Sonia library in Processing to monitor the sound level coming in from my laptop microphone. The GIF above simulates a conveyor belt that carries shapes from left to right. Every time the microphone detects a “clap”, the shape in the center is filled with a color, and the volume of the clap is used as the parameter that determines the color’s hue.
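One way the volume-to-hue step could work is a clamped linear mapping. The sketch below is plain Java so it runs outside Processing; the class name `HueMapper`, the method `levelToHue`, and the input and hue ranges are all assumptions for illustration, since the exact mapping is a design choice.

```java
// Hypothetical helper: maps a sound level to a hue value.
// The ranges are illustrative assumptions, not values from the sketch itself.
class HueMapper {
    /**
     * Linearly maps level from [minLevel, maxLevel] to [0, maxHue].
     * Levels outside the range are clamped to its ends.
     */
    static float levelToHue(float level, float minLevel, float maxLevel, float maxHue) {
        if (maxLevel <= minLevel) {
            throw new IllegalArgumentException("maxLevel must be greater than minLevel");
        }
        // Clamp so very quiet or very loud input stays inside the hue range.
        float clamped = Math.max(minLevel, Math.min(maxLevel, level));
        // Normalize to 0..1, then scale to the hue range.
        float t = (clamped - minLevel) / (maxLevel - minLevel);
        return t * maxHue;
    }
}
```

Inside a Processing sketch, the same idea could probably be written with the built-in `map()` and `constrain()` functions after switching to `colorMode(HSB)`.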

The Sonia library lets me read the sound level of the microphone input. I set up two thresholds to detect a “clap”: when the sound level rises above the upper threshold, the program considers that someone is “clapping”; once the level then drops below the lower threshold, the program switches the status from “clapping” back to “not clapping”. Using two thresholds instead of one keeps a single clap from being counted several times while its sound is still fading.
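The two-threshold logic can be sketched as a small state machine. This is a minimal version in plain Java, so it can be tested without a microphone; the class name `ClapDetector` and the threshold values in the usage note are assumptions for illustration.

```java
// Hypothetical helper: detects claps with an upper and a lower threshold.
class ClapDetector {
    private final float onThreshold;   // level above which a clap starts
    private final float offThreshold;  // level below which the clap ends
    private boolean clapping = false;  // current status

    ClapDetector(float onThreshold, float offThreshold) {
        if (offThreshold > onThreshold) {
            throw new IllegalArgumentException("offThreshold must not exceed onThreshold");
        }
        this.onThreshold = onThreshold;
        this.offThreshold = offThreshold;
    }

    /** Feeds one level reading; returns true only on the reading where a new clap starts. */
    boolean update(float level) {
        if (!clapping && level > onThreshold) {
            clapping = true;   // "not clapping" -> "clapping"
            return true;
        }
        if (clapping && level < offThreshold) {
            clapping = false;  // "clapping" -> "not clapping"
        }
        return false;
    }

    boolean isClapping() {
        return clapping;
    }
}
```

In the sketch, I would create something like `new ClapDetector(0.6f, 0.3f)` once and call `update(...)` with the current microphone level on every frame of `draw()` (in Sonia, I believe that level comes from `LiveInput.getLevel()`), coloring the center shape whenever it returns `true`.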

I plan to use this interface to simulate an interactive drum-hitting experience. To get there, I need to add more parameters to the program and work on the feedback the interface gives.